How you should not write code | chapter 2 | JavaScript

Write a better and clean code in JavaScript with small tips.

Writing a JavaScript code requires less effort. However, Due to its dynamic nature. It is hard to maintain and scale. To write better code, you need to understand its pitfalls. In this article, I will cover the common misleading APIs and patterns in JavaScript.

“The more I learn, the more I realize how much I don’t know.” — Albert Einstein

This article is a continuation of my previous article How you should not write code. If you have not read it, I will suggest you please read it.

1. Requiring (Begging) is not good

I have seen code where reading a JSON file, developers use [require](https://nodejs.org/api/modules.html#modules_require_id). However, require is not that performant as you think. Let’s explore with an example.

Create a user.json inside the folder [require_files]. I am showing a small code snippet of a big JSON file. However, you can download the longer version from Github.

// require_files/user.json

[

{

"_id": "5dea8219b7e4e8016a6661e0",

"index": 0,

"address": "231 Vandervoort Place, Hollins, South Carolina, 2782",

"about": "Ex nulla do duis exercitation Lorem occaecat veniam tempor voluptate laboris adipisicing proident incididunt incididunt. Aliqua enim duis quis esse eiusmod eiusmod tempor velit duis qui anim in. Ipsum incididunt occaecat eu sint. Ut nulla est commodo laborum minim incididunt proident nostrud consectetur tempor. Eiusmod aute tempor eu aute enim nulla ut aliquip. Pariatur consequat tempor tempor irure sunt cillum. Ullamco Lorem Lorem ullamco enim ex elit ut pariatur in qui.\r\n",

"greeting": "Hello, Cherie Webster! You have 7 unread messages.",

"favoriteFruit": "strawberry"

}

]

Let’s create the main function:

// req.js

function main() {

console.time("REQUIRE");

const user = require("./user.json");

console.timeEnd("REQUIRE");

console.time("REQUIRE-2");

const userJson = JSON.parse(fs.readFileSync(__dirname + "/user.json"));

console.timeEnd("REQUIRE-2");

}

main();

// output

// REQUIRE: 0.989ms

// REQUIRE-2: 0.245ms

If you notice the timing of the require and readFileSync, it is drastically different. The reason, require the method does a lot of things behind the scenes (like caching).

Let’s see another version of it inside a loop.

function main() {

console.time("REQUIRE");

const user = require("./user.json");

console.timeEnd("REQUIRE");

console.time("REQUIRE-2");

const userJson = JSON.parse(fs.readFileSync(__dirname + "/user.json"));

console.timeEnd("REQUIRE-2");

console.time("REQUIRE-FOR");

for (let e = 0; e < 10000; e++)

require("./user.json");

console.timeEnd("REQUIRE-FOR");

console.time("REQUIRE-FOR-2");

for (let e = 0; e < 10000; e++)

JSON.parse(fs.readFileSync(__dirname + "/user.json"));

console.timeEnd("REQUIRE-FOR-2");

}

main();

// Output

// REQUIRE: 0.956ms

// REQUIRE-2: 0.190ms

// REQUIRE-FOR: 164.483ms

// REQUIRE-FOR-2: 663.703ms

Here in for-loop, I have required the same file again and again. Since require is cached, require is very fast compared to our version of [fs.readFileSync](https://nodejs.org/api/fs.html#fs_fs_readfilesync_path_options). Let’s create a cached requireJSON utility library.

Here you can clearly see the performance difference: requireJSON is 40 times faster than the require.

// util.js

const fs = require("fs");

let files = {};

function requireJSON(path) {

if (!files[path]) {

files[path] = JSON.parse(fs.readFileSync(path));

}

return files[path];

}

module.exports = {

requireJSON

};

// req.js

const fs = require("fs");

const { requireJSON } = require("./util");

function main() {

// ...Rest of the code

console.time("REQUIRE-FOR-NEW");

for (let e = 0; e < 10000; e++) {

require("./user.json");

}

console.timeEnd("REQUIRE-FOR-NEW");

console.time("REQUIRE-FOR-NEW-2");

for (let e = 0; e < 10000; e++) {

requireJSON(__dirname + "/user.json");

}

console.timeEnd("REQUIRE-FOR-NEW-2");

}

main();

// Output

// REQUIRE: 0.737ms

// REQUIRE-2: 0.196ms

// REQUIRE-FOR: 170.930ms

// REQUIRE-FOR-2: 608.423ms

// REQUIRE-FOR-NEW: 147.607ms

// REQUIRE-FOR-NEW-2: 3.071ms

Note: It doesn’t mean we should not stop using require. require is mainly to load JS files. It has a lot of functionality. However, whenever you are dealing with JSON(static) files. I would recommend creating a small utility function or using any library.

Code: https://github.com/deepakshrma/how-not-to-write-code/tree/master/js/require_files

2. Don’t use Sync APIs

In the above example, I talked a lot about require. However, you should not use any sync I/O in Node.js. It drastically reduces your app performance.

//util.js

function requireFile(path) {

if (!files[path]) {

files[path] = fs.readFileSync(path);

}

return files[path];

}

// sync_server.js

const http = require('http');

const {requireFile} = require("../require_files/util")

const server = http.createServer();

server.on('request', async (req, res) => {

res.end(requireFile(__dirname+"/users.json"));

});

server.listen(8080);

// no_sync_server.js

const http = require('http');

const fs = require('fs');

const server = http.createServer();

server.on('request', async (req, res) => {

fs.createReadStream(__dirname+"/users.json").pipe(res)

});

server.listen(8080);

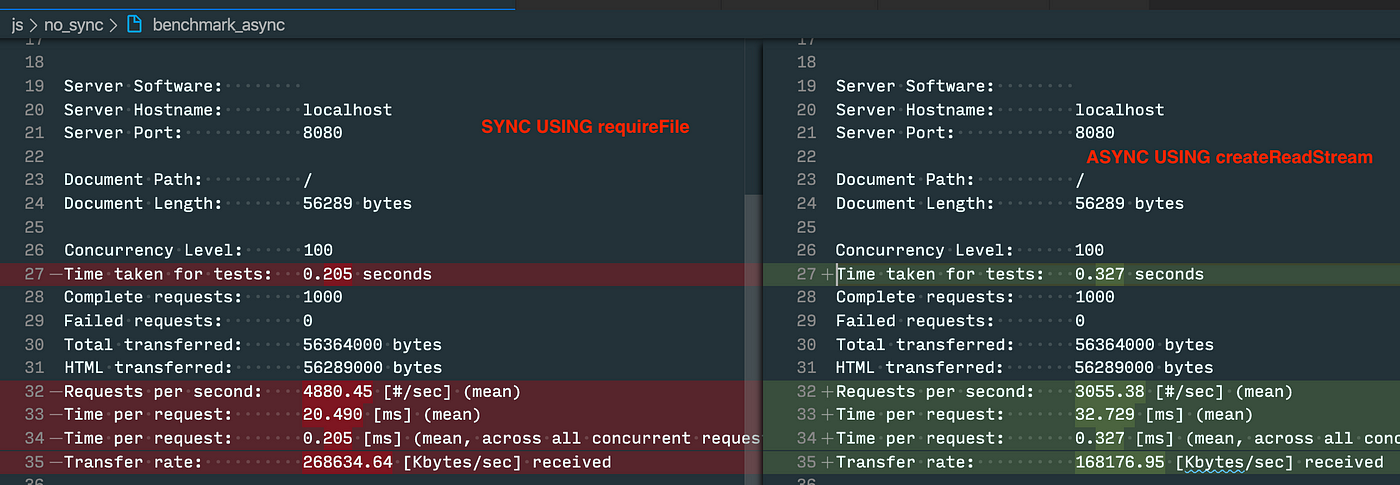

AB Test benchmark: ab -n 1000 -c 100 [http://localhost:8080/](http://localhost:8080/)

Benchmark: Sync vs Stream

Note: The sync version using the caching requireFile function

The performance of the sync file version seems better than createReadStream. However, the sync version is using cache API and we can’t cache 2GB files like this. Let’s remove the cache.

// utils.js

function requireFileNoCache(path) {

return fs.readFileSync(path);

}

// js/no_sync/sync_server_no_cache.js

const http = require('http');

const {requireFileNoCache} = require("../require_files/util")

const server = http.createServer();

server.on('request', async (req, res) => {

res.end(requireFileNoCache(__dirname+"/users.json"));

});

server.listen(8080);

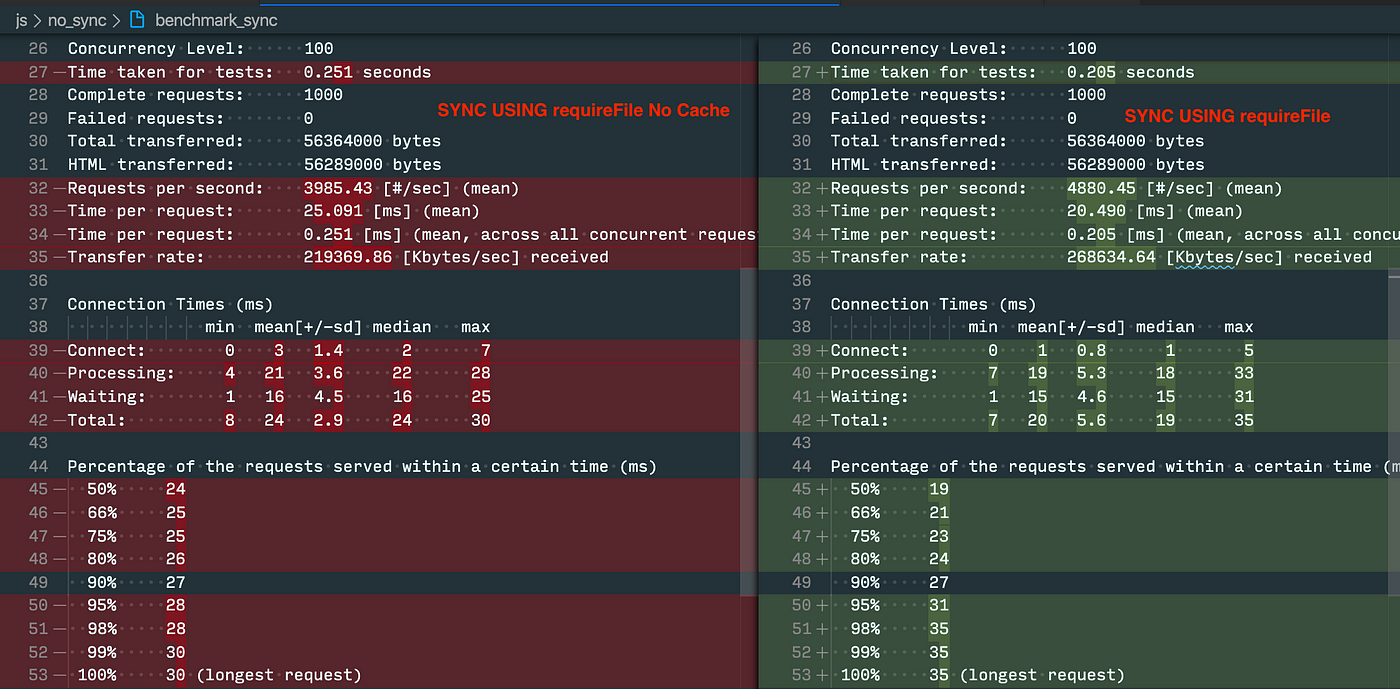

Benchmark: Sync No Cache vs Sync

As you can see by just removing the cache, the performance has reduced 20%, and the file is very small. Think about if the file size is too big. It can really impact your server **concurrency**.

Code: https://github.com/deepakshrma/how-not-to-write-code/tree/master/js/no_sync

3. Hey Underscore, You’re Doing It Wrong:

I really like the video of Brian Lonsdorf (DrBoolean). Here in this video, He explained how underscore is doing wrong. If you are really interested in functional programming I would recommend watching this talk.

Let’s see a small example from his talk.

function firstLetters(words) {

return _.map(words, (word) => {

return _.first(word)

})

}

console.log(firstLetters(["Test", "Rest"]))

// [ "T", "R"]

In the above example, firstLetters is trying to get the first character of words in an Array. If we use a [**partial function**](https://scotch.io/tutorials/javascript-functional-programming-explained-partial-application-and-currying)(curry) concept. We can reduce the code and make it more readable.

Let’s see my own version of [**partial function**](https://scotch.io/tutorials/javascript-functional-programming-explained-partial-application-and-currying).

const first = function(str) {

return str[0];

};

const map = function(fun, arr) {

return arr.map(fun);

};

console.log(first("Test"));

console.log(map(first, ["Test", "Rest"]));

// ["T", "R"]

In the above example, nothing is fancy. first returns the first character of the word and map just iterate.

const curry = (fn, arity = fn.length, ...args) =>

arity <= args.length ? fn(...args) : curry.bind(null, fn, arity, ...args);

const first = function(str) {

return str[0];

};

const map = curry(function(fun, arr) {

return arr.map(fun);

});

const firstLetters = map(first);

console.log(firstLetters(["Test", "Rest"]));

// ["T", "R"]

If you noticed, I have added just a small utility function curry which helps us to make any function a partial function. Using that now we can make our firstLetters function more readable/reusable.

Code: https://github.com/deepakshrma/how-not-to-write-code/blob/master/js/partial_fun.js

4. Object APIs are not functional

The object class APIs are not functional in nature. When I say so, we have to be aware of the facts. Let’s take a very basic example. Suppose we have an object of the country code and name where the key is code and value is the name. And we want to convert it into an array of country objects.

const obj = {

"IN": "India",

"SG": "Singapore",

"US": "United State Of America",

"JP": "JAPAN",

"UK": "United Kingdom"

}

// get all keys

console.log(Object.keys(obj))

// get all key-value pair entries

console.log(Object.entries(obj))

// get all countries array

console.time("entries")

console.log(Object.entries(obj).map(([code, name]) => {

return {

name,

code

}

}))

console.timeEnd("entries")

// Output

[ { name: 'India', code: 'IN' },

{ name: 'Singapore', code: 'SG' },

{ name: 'United State Of America', code: 'US' },

{ name: 'JAPAN', code: 'JP' },

{ name: 'United Kingdom', code: 'UK' } ]

Here this example works perfectly. Things to be noted, Object.entries itself is a for-loop and after that, we call map function. Meaning, we are looping twice for the same thing.

// flatObject in array

console.time("flatObject")

const flatObject = (obj) => {

let array = []

for(let key in obj) {

array.push({name: obj[key], code: key})

}

return array

}

console.log(flatObject(obj))

console.timeEnd("flatObject")

//Output:

[ { name: 'India', code: 'IN' },

{ name: 'Singapore', code: 'SG' },

{ name: 'United State Of America', code: 'US' },

{ name: 'JAPAN', code: 'JP' },

{ name: 'United Kingdom', code: 'UK' } ]

flatObject: 0.415ms

The code is the same as above but with a single time loop. The performance has increased significantly. In this solution, the conversion logic is tightly coupled within the function. Let's decouple it.

// flatObject in array, with mapper function

console.time("flatObjectFn")

// fun object, function -> object[]

const flatObjectFn = (obj, fn) => {

let array = []

for(let key in obj) {

array.push(fn(key, obj[key]))

}

return array

}

console.log(flatObjectFn(obj, (key, value) => ({name:value, code: key})))

console.timeEnd("flatObjectFn")

//Output

[ { name: 'India', code: 'IN' },

{ name: 'Singapore', code: 'SG' },

{ name: 'United State Of America', code: 'US' },

{ name: 'JAPAN', code: 'JP' },

{ name: 'United Kingdom', code: 'UK' } ]

flatObjectFn: 0.128ms

This works the same as the above function. However, we have decoupled the conversion logic into a **mapper** function. And somehow it's more performant than the old version.

Code: https://github.com/deepakshrma/how-not-to-write-code/blob/master/js/object_apis.js

5. Let’s Promise you won't reject me

I personally love the [Promise](https://developer.mozilla.org/en-US/docs/Web/JavaScript/Reference/Global_Objects/Promise) API. However, I see people struggle a lot with promises. The biggest challenge is to work with multiple promises running in parallel.

Here is one common problem we can solve. Suppose we have to hit 2 different async APIs and we want to collect data from these two APIs.

Let’s see the solution.

const delay = (fn, wait, ...args) => setTimeout(fn, wait, ...args);

const resolve = () => {

return new Promise(r => {

delay(r, 1000, "SUCCESS");

});

};

let all = Promise.all([resolve(), resolve()]);

all.then(console.log).catch(console.error);

// [ 'SUCCESS', 'SUCCESS' ]

Note: deley is a small utility function to simulate async I/O.

All looks good. However, there is an issue. What if one of the call fails??

const delay = (fn, wait, ...args) => setTimeout(fn, wait, ...args);

const resolve = () => {

return new Promise(r => {

delay(r, 1000, "SUCCESS");

});

};

const reject = () => {

return new Promise((_, r) => {

delay(r, 800, "FAIL");

});

};

let all = Promise.all([resolve(), reject()]);

all.then(console.log).catch(console.error);

// Outputs

FAIL

It fails all. We will not get output for the second API call.

According to the types definition, Promise.all will reject if any promise is rejected.

// Type definition

/**

* Creates a Promise that is resolved with an array of results when all of the provided Promises

* resolve, or rejected when any Promise is rejected.

* @param values An array of Promises.

* @returns A new Promise.

*/

//all<T>(values: (T | PromiseLike<T>)[]): Promise<T[]>;

To solve this issue, we can create a very basic utility function collectAll.

const collectAll = (...promises) => {

return new Promise(r => {

const results = [];

let counter = 0;

const final = (index, res) => {

results[index] = res;

counter++;

if (counter === promises.length) r(results);

};

promises.map((promise, index) => {

promise

.then(final.bind(null, index))

.catch(final.bind(null, index));

});

});

};

let all = collectAll(resolve(), resolve());

all.then(console.log)

all = collectAll(resolve(), reject());

all.then(console.log)

// Output

[ 'SUCCESS', 'SUCCESS' ]

[ 'SUCCESS', 'FAIL' ]

Code: https://github.com/deepakshrma/how-not-to-write-code/blob/master/js/promis_merge.js

Thanks for reading. If you like this article please let me know in the comment. and Please subscribe to my feeds for a more coding blog like this. You can find all code here in GitHub.